Galton Board

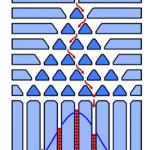

At the end of the 19th century, the English polymath Sir Francis C. Galton (1822–1911) developed an apparatus to demonstrate the binomial distribution. It was later named the Galton board in his honour. A version of it has been built in ADVENTURE LAND MATHEMATICS:

Between two glass plates, several 50-cent coins are each fixed with three pins and arranged evenly so that, taken together, they form an equilateral triangle of twelve “cascades” (see figure 1 below):

\[\sum_{n:\,a\leq\frac{n-\mu}{\sigma}\leq b} p_N(n)\to\frac{1}{\sqrt{2\pi}}\int_a^b e^{-z^2/2}\,dz\]

\[\left\{\frac{-Np}{\sqrt{Np(1-p)}},\ldots,\frac{N(1-p)}{\sqrt{Np(1-p)}}\right\}.\]

\[\sum_{k=0}^N p_N(k)\,\delta_{\frac{k-\mu}{\sigma}},\]
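
The formulas above can be tried out numerically. The following is a minimal Python sketch, not a description of the exhibit itself. It assumes that each of the N cascades deflects a ball to the right with probability p independently of the others, that p_N(n) is the binomial probability of n right-deflections, and that μ = Np and σ = √(Np(1−p)), which is what the set of standardized values above suggests. The function names, the ball count, and the interval [−1, 1] are illustrative choices only.

```python
import math
import random


def binomial_pmf(N, p):
    """Return [p_N(0), ..., p_N(N)] for the binomial distribution."""
    return [math.comb(N, k) * p**k * (1 - p) ** (N - k) for k in range(N + 1)]


def simulate_board(N, p, balls, seed=0):
    """Drop `balls` balls through N cascades; return the relative frequency per bin."""
    rng = random.Random(seed)
    counts = [0] * (N + 1)
    for _ in range(balls):
        # The bin index is the number of times the ball was deflected to the right.
        k = sum(rng.random() < p for _ in range(N))
        counts[k] += 1
    return [c / balls for c in counts]


def standardized_mass(N, p, a, b):
    """Sum p_N(n) over all n with a <= (n - mu) / sigma <= b."""
    mu = N * p                          # assumed mean
    sigma = math.sqrt(N * p * (1 - p))  # assumed standard deviation
    pmf = binomial_pmf(N, p)
    return sum(pmf[n] for n in range(N + 1) if a <= (n - mu) / sigma <= b)


def normal_mass(a, b):
    """(1 / sqrt(2 pi)) times the integral of exp(-z^2 / 2) from a to b, via erf."""
    return 0.5 * (math.erf(b / math.sqrt(2)) - math.erf(a / math.sqrt(2)))


if __name__ == "__main__":
    N, p = 12, 0.5  # twelve cascades, symmetric deflection (assumption)
    freq = simulate_board(N, p, balls=20000)
    for k, (f, q) in enumerate(zip(freq, binomial_pmf(N, p))):
        print(f"bin {k:2d}: simulated {f:.4f}  binomial {q:.4f}")

    # Compare the standardized binomial sum with the normal integral.
    for rows in (12, 100, 400):
        print(rows, standardized_mass(rows, p, -1.0, 1.0), normal_mass(-1.0, 1.0))
```

For a finite number of cascades the two sides agree only approximately, because the binomial distribution is discrete; the difference shrinks as N grows.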